You’ll need to calibrate your modulation analyzer routinely to keep signal quality assessments accurate, because calibration drift directly affects amplitude accuracy, EVM measurements, and constellation diagram precision. Start by visually inspecting connectors, using NIST-traceable calibration hardware, and allowing proper warm-up time. Then verify frequency accuracy, input attenuator settings, and power readings across your operating range. Finally, calibrate demodulator settings like AM depth, FM deviation, and phase detector linearity for both analog and digital modulation. This helps you tell real signal problems from instrument errors and avoid costly troubleshooting.

Understanding Modulation Analyzer Fundamentals and Measurement Capabilities

When you’re testing communications systems, modulation analyzers serve as your primary tool for measuring how accurately transmitters encode information onto carrier signals. These instruments differ from basic spectrum analyzers by demodulating signals to assess modulation quality parameters like error vector magnitude, deviation, and depth.

You’ll encounter three main types: analog modulation analyzers for AM/FM, digital analyzers for PSK/QAM schemes, and vector signal analyzers combining both capabilities with superior dynamic range. Modern vector signal analyzers work as advanced receivers with excellent frequency response, producing precise constellation diagrams and symbol-level analysis.

Your measurements verify transmitter performance, troubleshoot communication links, and maintain regulatory compliance. Paired with a calibrated signal generator, the analyzer forms a complete testing solution that preserves signal integrity across telecommunications, broadcasting, and aerospace applications.

The Critical Impact of Calibration on Signal Quality Assessment Accuracy

A single miscalibrated measurement parameter can undermine your entire signal quality assessment, making good transmitters appear to fail or hiding real performance problems. When your amplitude accuracy drifts by just 0.5 dB, you’ll misinterpret link budgets and make costly transmitter adjustments based on false data.
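To put the 0.5 dB figure in perspective, the short sketch below converts a level error in dB into the equivalent error in linear power. The function names are illustrative and not taken from any instrument API.

```python
def db_to_power_ratio(delta_db: float) -> float:
    """Convert a level difference in dB to a linear power ratio."""
    return 10 ** (delta_db / 10)


def power_error_percent(delta_db: float) -> float:
    """Express an amplitude reading error (in dB) as a percentage power error."""
    return (db_to_power_ratio(delta_db) - 1.0) * 100.0


if __name__ == "__main__":
    # Illustrative drift values; 0.5 dB is the figure used in the text.
    for drift_db in (0.1, 0.5, 1.0):
        print(f"{drift_db:+.1f} dB drift -> {power_error_percent(drift_db):+.1f}% power error")
```

A 0.5 dB drift corresponds to roughly a 12% error in indicated power, which is enough to consume a meaningful share of a tight link margin.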

Incorrect resolution bandwidth settings combined with an elevated noise floor will corrupt your EVM measurements, causing compliant equipment to fail compliance tests. Your measurement uncertainty grows as calibration drift compounds across frequency response, phase linearity, and power measurements.
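One common way to budget how separate error sources add up is a root-sum-square (RSS) combination. The sketch below is a simplification: it assumes the contributions are independent and small enough to combine directly in dB, and the numeric values are hypothetical rather than taken from any instrument specification.

```python
import math


def combined_uncertainty_db(contributions_db):
    """Root-sum-square combination of independent uncertainty contributions (dB)."""
    return math.sqrt(sum(u ** 2 for u in contributions_db))


# Hypothetical contributions, for illustration only.
budget = {
    "frequency_response": 0.30,
    "input_attenuator": 0.20,
    "reference_level": 0.15,
}

total = combined_uncertainty_db(budget.values())

if __name__ == "__main__":
    print(f"Combined uncertainty: {total:.2f} dB")
```

If any single contribution drifts, the combined figure grows with it, which is why each path is verified separately during calibration.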

Without proper calibration techniques applied systematically, you can’t distinguish between actual signal degradation and instrument error. The financial impact extends beyond failed audits—you’ll waste resources troubleshooting phantom problems while genuine issues remain undetected.

Preparing Your Modulation Analyzer for Calibration Success

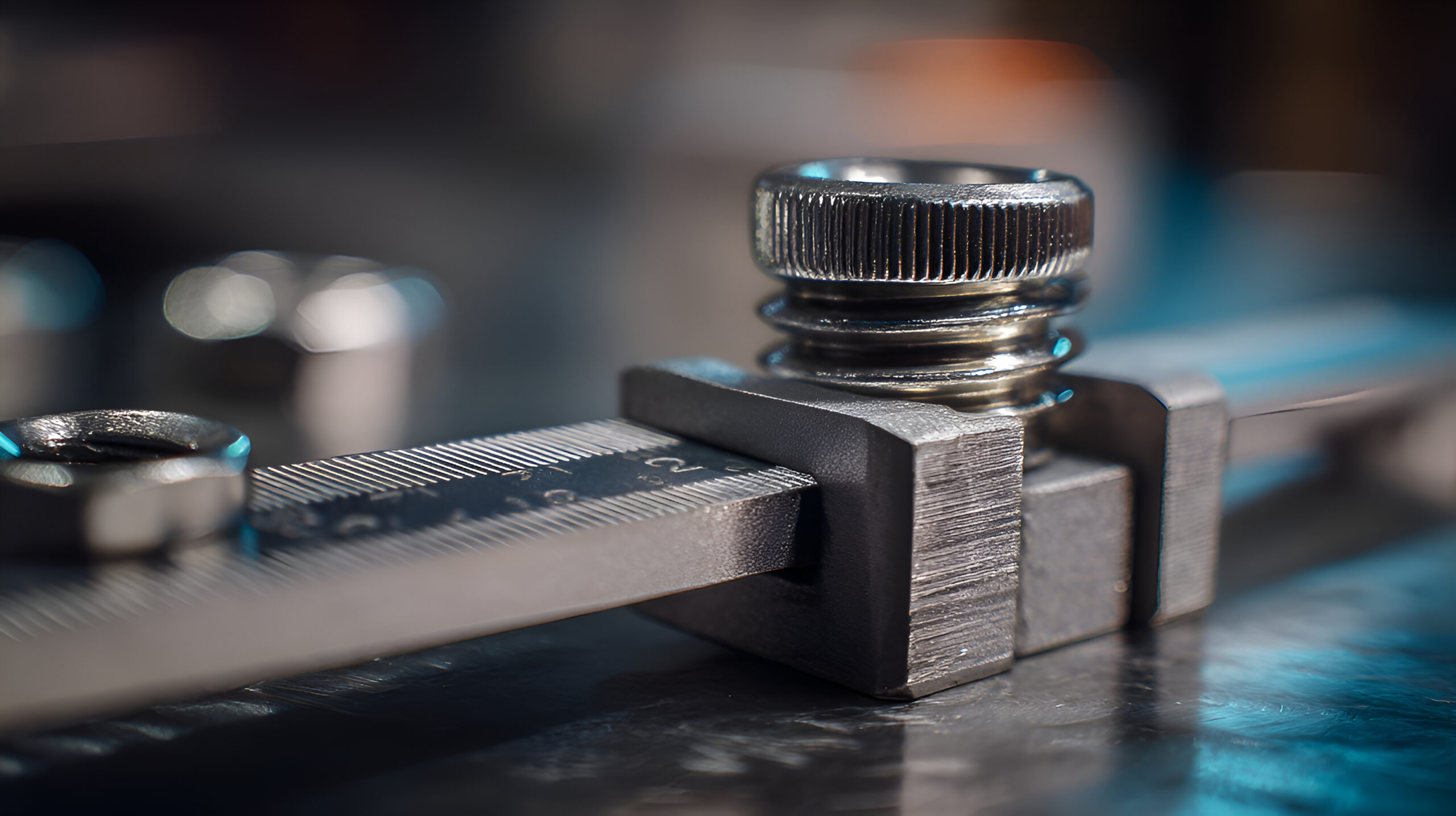

Your modulation analyzer’s calibration accuracy depends heavily on the preparation steps you complete before connecting any reference equipment. Begin with a thorough visual inspection of connectors, checking for damage that would compromise measurements.

You’ll need calibration hardware traceable to NIST standards and ISO/IEC 17025 requirements—the same precision used in spectrum analyzer calibration and real-time spectrum analyzer verification.

Allow adequate warm-up time, typically 30-60 minutes, ensuring thermal stability before measurements. Document environmental conditions: temperature between 20-25°C and humidity below 70% relative. Configure your analyzer to factory-recommended settings, disabling automatic gain control and setting appropriate frequency ranges.
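The environmental limits above lend themselves to a simple pre-calibration check. This is a minimal sketch using the limits quoted in the text (20-25°C, humidity below 70%, at least 30 minutes of warm-up); the `Environment` class and `readiness_issues` function are hypothetical names, and your instrument's manual may specify different limits.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    temperature_c: float
    humidity_pct: float
    warmup_minutes: float


def readiness_issues(env: Environment, min_warmup_minutes: float = 30.0):
    """Return a list of reasons the bench is not ready; empty means ready."""
    issues = []
    if not 20.0 <= env.temperature_c <= 25.0:
        issues.append(f"temperature {env.temperature_c} C outside 20-25 C")
    if env.humidity_pct >= 70.0:
        issues.append(f"humidity {env.humidity_pct}% not below 70%")
    if env.warmup_minutes < min_warmup_minutes:
        issues.append(f"warm-up {env.warmup_minutes} min below {min_warmup_minutes} min")
    return issues


if __name__ == "__main__":
    print(readiness_issues(Environment(23.0, 45.0, 45.0)))  # ready
    print(readiness_issues(Environment(28.0, 75.0, 10.0)))  # three issues
```

Returning the list of issues, rather than a bare true/false, makes it easy to copy the same values into the calibration record.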

Verify firmware versions match calibration procedures. Clean all RF connections using approved solvents and lint-free materials. These preparation steps directly determine whether your calibration delivers traceable, reliable results.

RF Input Path and Frequency Calibration Procedures

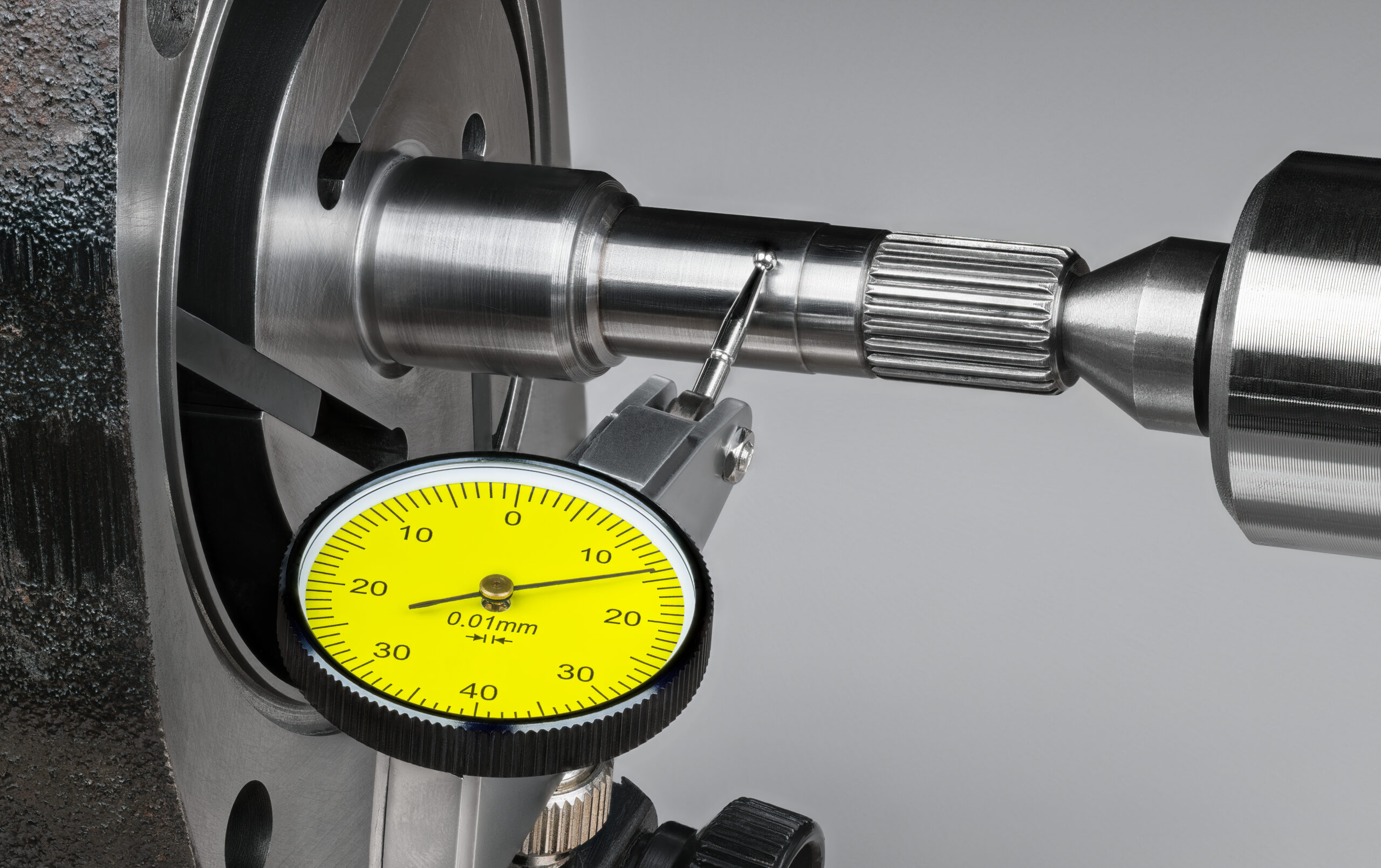

Frequency accuracy forms the foundation of every measurement your modulation analyzer performs, making RF input path calibration your most critical verification step. You’ll need to verify frequency accuracy across your analyzer’s entire operating range using calibrated signal generators at multiple test points.
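Frequency accuracy at each test point is usually expressed as an error in parts per million (ppm) relative to the reference. The sketch below shows that arithmetic; the test points and the 0.5 ppm limit are hypothetical placeholders, so substitute the values from your analyzer's specification.

```python
def frequency_error_ppm(measured_hz: float, reference_hz: float) -> float:
    """Frequency error relative to the reference, in parts per million."""
    return (measured_hz - reference_hz) / reference_hz * 1e6


# (reference_hz, measured_hz) pairs; hypothetical readings for illustration.
test_points = [
    (100e6, 100e6 + 12.0),
    (1e9, 1e9 + 150.0),
    (3e9, 3e9 - 2400.0),
]

LIMIT_PPM = 0.5  # assumed limit, not a published specification

if __name__ == "__main__":
    for reference_hz, measured_hz in test_points:
        error = frequency_error_ppm(measured_hz, reference_hz)
        status = "PASS" if abs(error) <= LIMIT_PPM else "FAIL"
        print(f"{reference_hz / 1e6:8.1f} MHz: {error:+.3f} ppm {status}")
```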

Start by checking your input attenuator settings at various power levels to guarantee accurate amplitude measurements throughout the dynamic range.

Your calibration process must include power reading verification, typically within ±0.5 dB across all frequency bands. Don’t overlook input impedance and return loss measurements, as these directly affect RF measurement quality. Compare your analyzer’s performance against a swept-tuned spectrum analyzer or other certified RF analysis tools. Document readings at low, mid, and high frequencies to confirm linearity across the full spectrum.
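The power verification step can be sketched as a tolerance check against the ±0.5 dB figure mentioned above. The readings below are hypothetical, and `check_power_readings` is an illustrative helper rather than part of any instrument software.

```python
def check_power_readings(readings, tolerance_db: float = 0.5):
    """Compare measured power against reference power at each frequency.

    readings: iterable of (freq_hz, reference_dbm, measured_dbm).
    Returns a list of (freq_hz, error_db, passed).
    """
    results = []
    for freq_hz, reference_dbm, measured_dbm in readings:
        error_db = measured_dbm - reference_dbm
        results.append((freq_hz, error_db, abs(error_db) <= tolerance_db))
    return results


# Hypothetical low, mid, and high frequency readings at a -10 dBm reference.
sample = [
    (10e6, -10.0, -10.2),
    (1e9, -10.0, -9.9),
    (3e9, -10.0, -9.4),
]

if __name__ == "__main__":
    for freq_hz, error_db, passed in check_power_readings(sample):
        print(f"{freq_hz / 1e6:8.1f} MHz: {error_db:+.2f} dB {'PASS' if passed else 'FAIL'}")
```

Repeating the same check at several input attenuator settings gives a quick view of amplitude accuracy across the dynamic range.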

Demodulator Calibration Across Analog and Digital Modulation Schemes

Once you’ve verified your RF input path accuracy, the demodulator circuits require their own distinct calibration procedures to extract modulation characteristics correctly. Your demodulator calibration must address both analog and digital modulation schemes to guarantee accurate signal quality assessment across your entire operating range.

Essential demodulator calibration procedures include:

- AM depth and FM deviation calibration – Verify measurement accuracy across the full modulation bandwidth using precision reference signals traceable to national standards.

- Digital modulation EVM verification – Calibrate error vector magnitude measurements for QPSK, QAM, and OFDM formats to validate constellation accuracy.

- Phase detector linearity testing – Confirm phase measurement accuracy for PM schemes and RF spectrum analysis applications.

- Symbol timing recovery optimization – Adjust clock recovery circuits to maintain synchronization across varying signal conditions and data rates.
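For the EVM item above, it helps to see how the figure is derived from measured and ideal constellation points. This sketch uses RMS EVM normalized to the RMS power of the ideal symbols, which is one common convention; some standards normalize to peak constellation power instead, so treat the choice as an assumption. The symbol values are synthetic.

```python
import math


def evm_percent(measured, ideal):
    """RMS error vector magnitude in percent, normalized to RMS reference power."""
    if len(measured) != len(ideal) or not ideal:
        raise ValueError("measured and ideal must be non-empty and equal length")
    error_power = sum(abs(m - i) ** 2 for m, i in zip(measured, ideal))
    reference_power = sum(abs(i) ** 2 for i in ideal)
    return 100.0 * math.sqrt(error_power / reference_power)


# Ideal QPSK symbols and a synthetic measurement with a 5% gain error.
ideal_symbols = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]
measured_symbols = [symbol * 1.05 for symbol in ideal_symbols]

if __name__ == "__main__":
    print(f"EVM: {evm_percent(measured_symbols, ideal_symbols):.2f}%")
```

Feeding the analyzer a reference signal with a known, deliberately introduced impairment and comparing the reported EVM with the computed value is one way to cross-check the reading.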

Audio, Baseband, and Spectrum Measurement Calibration Techniques

After you calibrate the demodulator to extract modulation characteristics accurately, you must also check the baseband and audio measurement paths so that you can trust the resulting signal quality measurements.

You’ll need to verify audio frequency response flatness across your analyzer’s specified range, typically 20 Hz to 20 kHz. Adjust output level accuracy using precision audio generators, guaranteeing measurements reflect true signal amplitudes. Test total harmonic distortion (THD) and signal-to-noise ratio (SNR) readings against known reference sources to substantiate your analyzer’s baseband measurement capability.
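As a reference for the THD check, the sketch below computes total harmonic distortion from the RMS amplitude of the fundamental and its harmonics, using the standard definition (root-sum-square of the harmonics divided by the fundamental). The amplitudes are made-up example values.

```python
import math


def thd_percent(fundamental_vrms: float, harmonics_vrms) -> float:
    """Total harmonic distortion in percent, relative to the fundamental."""
    if fundamental_vrms <= 0:
        raise ValueError("fundamental amplitude must be positive")
    harmonic_rss = math.sqrt(sum(h ** 2 for h in harmonics_vrms))
    return 100.0 * harmonic_rss / fundamental_vrms


if __name__ == "__main__":
    # Example: 1 V fundamental with 3 mV second and 4 mV third harmonics.
    print(f"THD: {thd_percent(1.0, [0.003, 0.004]):.2f}%")
```

Comparing the analyzer's THD reading against a reference source with a known distortion level then becomes a direct numeric comparison.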

For baseband signals, verify DC offset accuracy and amplitude linearity across the full dynamic range. Spectrum measurement calibration requires testing frequency response flatness, resolution bandwidth accuracy, and noise floor verification. Together, these calibrations ensure your modulation analyzer gives reliable results for the audio and baseband paths, which are important to overall communication system performance.

Verification Testing, Documentation, and Traceability Requirements

Every calibration procedure concludes with verification testing that proves your modulation analyzer meets its specified performance tolerances. You’ll need to confirm both amplitude calibration and linearity calibration against traceable reference standards that maintain an unbroken chain to national metrology institutes.

Your documentation must include:

- Calibration constants applied during adjustments with before-and-after measurement data

- Test results at each verification point showing pass/fail status against manufacturer specifications

- Environmental conditions including temperature, humidity, and warm-up time

- Traceability information linking your reference equipment to NIST or equivalent standards
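The documentation items listed above map naturally onto a structured record. The following is a minimal sketch of one possible layout; the class and field names are hypothetical and are not drawn from any standard or calibration software.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VerificationPoint:
    name: str
    nominal: float
    as_found: float   # reading before adjustment
    as_left: float    # reading after adjustment
    tolerance: float  # allowed deviation from nominal
    unit: str

    @property
    def passed(self) -> bool:
        return abs(self.as_left - self.nominal) <= self.tolerance


@dataclass
class CalibrationRecord:
    instrument_id: str
    reference_standard_id: str  # traceability link to the reference equipment
    temperature_c: float
    humidity_pct: float
    warmup_minutes: float
    points: List[VerificationPoint] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        # A record with no verification points is not treated as a pass.
        return bool(self.points) and all(p.passed for p in self.points)


if __name__ == "__main__":
    record = CalibrationRecord("MA-001", "REF-123", 23.0, 45.0, 45.0)
    record.points.append(VerificationPoint("AM depth", 50.0, 50.8, 50.1, 0.5, "%"))
    print("PASS" if record.passed else "FAIL")
```

Keeping as-found and as-left values side by side preserves the before-and-after data that drift analysis relies on.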

This documentation supports compliance with standards such as ISO/IEC 17025 and demonstrates measurement validity during audits. You’ll also establish calibration intervals based on drift analysis, equipment criticality, and historical performance data.
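Drift analysis can be as simple as fitting a trend to historical as-found errors and projecting when the trend reaches the tolerance limit. The sketch below assumes linear drift, which is a simplification that real instruments may not follow, and the history values are invented for illustration.

```python
def days_until_out_of_tolerance(history, tolerance):
    """Project when a linear drift trend will reach +/- tolerance.

    history: list of (day, error) pairs from past calibrations.
    Returns days remaining after the most recent point, or None if no drift.
    """
    n = len(history)
    if n < 2:
        raise ValueError("need at least two calibration points")
    mean_x = sum(x for x, _ in history) / n
    mean_y = sum(y for _, y in history) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in history)
    if sxx == 0:
        raise ValueError("calibration days must not all be identical")
    # Ordinary least-squares slope and intercept.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in history) / sxx
    intercept = mean_y - slope * mean_x
    if slope == 0:
        return None
    limit = tolerance if slope > 0 else -tolerance
    crossing_day = (limit - intercept) / slope
    last_day = max(x for x, _ in history)
    return max(0.0, crossing_day - last_day)


if __name__ == "__main__":
    # Hypothetical as-found amplitude errors (dB) at 0, 180, and 360 days.
    past = [(0, 0.00), (180, 0.10), (360, 0.20)]
    print(days_until_out_of_tolerance(past, tolerance=0.5))
```

In practice the projected figure would be shortened by a safety margin and weighed against equipment criticality before setting the interval.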

Establishing an Effective Calibration Management Program

A successful calibration management program is built on three key elements:

- Risk-based scheduling that focuses on the most critical equipment.

- Automated tracking systems to prevent lapses.

- Trained personnel with both technical knowledge and an understanding of quality standards.
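The automated tracking element can start as something as small as a due-date check over the equipment inventory. This sketch uses only the Python standard library; the instrument IDs, dates, and 30-day warning window are hypothetical.

```python
from datetime import date, timedelta


def next_due(last_calibrated: date, interval_days: int) -> date:
    """Date on which the next calibration falls due."""
    return last_calibrated + timedelta(days=interval_days)


def instruments_due(inventory, today: date, warning_days: int = 30):
    """Return (instrument_id, due_date) for items overdue or due soon.

    inventory: dict mapping instrument_id -> (last_calibrated, interval_days).
    """
    due = []
    for instrument_id, (last_calibrated, interval_days) in inventory.items():
        due_date = next_due(last_calibrated, interval_days)
        if due_date - today <= timedelta(days=warning_days):
            due.append((instrument_id, due_date))
    return sorted(due, key=lambda item: item[1])


if __name__ == "__main__":
    inventory = {
        "MA-001": (date(2024, 1, 15), 365),
        "MA-002": (date(2024, 6, 1), 365),
    }
    for instrument_id, due_date in instruments_due(inventory, today=date(2025, 1, 1)):
        print(f"{instrument_id} due {due_date.isoformat()}")
```

Sorting by due date puts the most urgent instruments first, and a shorter interval for critical equipment is one simple way to make the schedule risk-based.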

You’ll need to categorize your test equipment based on measurement criticality, frequency range requirements, and usage patterns. Your RF engineering team should establish clear protocols for signal analysis documentation and calibration verification.

Some organizations use calibration-free methods for certain tasks, such as image quality checks, but modulation analyzers need strict traceability. Implement data analytics to optimize calibration intervals, reducing costs without jeopardizing accuracy. Integration with your quality management system guarantees consistency across operations.

Regular training keeps personnel current with evolving standards and measurement techniques, maintaining program effectiveness and regulatory compliance.

Signal Quality Assurance Through Expert Modulation Analyzer Calibration

Modulation analyzers serve as the gatekeepers of signal quality in modern communications systems, making their calibration accuracy fundamental to reliable network performance, regulatory compliance, and confident troubleshooting decisions. By implementing a systematic calibration program that addresses RF input paths, demodulator accuracy, and baseband measurements across all modulation types your systems utilize, you establish the measurement confidence essential for maintaining signal integrity in increasingly complex communications environments.

Regular professional calibration not only verifies current performance but also identifies developing issues before they compromise critical measurements, protecting both your equipment investment and the systems that depend on accurate signal quality assessment. The precision of your modulation analysis directly impacts every decision about transmitter performance, system optimization, and compliance verification—making calibration an operational necessity rather than a maintenance option.

Contact EML Calibration today to leverage their specialized expertise in modulation analyzer calibration, supported by ISO/IEC 17025:2017 accreditation and NIST traceable standards that provide the traceability and credibility your signal quality measurements demand.

With over 25 years of proven experience in communications test equipment and flexible on-site or comprehensive laboratory calibration services, EML Calibration ensures your modulation analyzers maintain the precision and reliability necessary for confident signal quality assessment in mission-critical applications.