You’ll need to establish measurement traceability to national standards maintained by NIST (or an equivalent national metrology institute) and ensure your reference equipment is at least four times more accurate than the test set (a 4:1 ratio). Control environmental conditions while you analyze frequency stability against cesium or rubidium references, use time domain reflectometry to verify impedance, and calibrate jitter measurements with precision reference clocks.

Document all procedures with uncertainty calculations, verify error detection capabilities, and validate protocol decode functions. Following these steps helps protect your network’s operational integrity and supports regulatory compliance.

Understanding Transmission Test Set Equipment and Key Specifications

Telecommunications professionals rely on transmission test sets to measure and verify the performance of communication systems, from basic copper circuits to advanced fiber optic networks. These instruments are primary diagnostic tools for characterizing signals across a wide range of telecommunications technologies.

Equipment specifications define critical parameters, including frequency range, amplitude accuracy, and interface compatibility. Modern test sets offer extensive measurement capabilities, spanning bit error rate testing, jitter analysis, and protocol verification. How well you understand these specifications directly affects the reliability and precision of your test results.

When selecting test equipment, choose instruments that match your specific network requirements. Whether you’re troubleshooting T1/E1 circuits, validating SONET/SDH performance, or analyzing Ethernet services, matching the instrument’s specifications to the technology under test is what makes thorough system verification possible.

Calibration Standards and Traceability Requirements for Telecommunications Testing

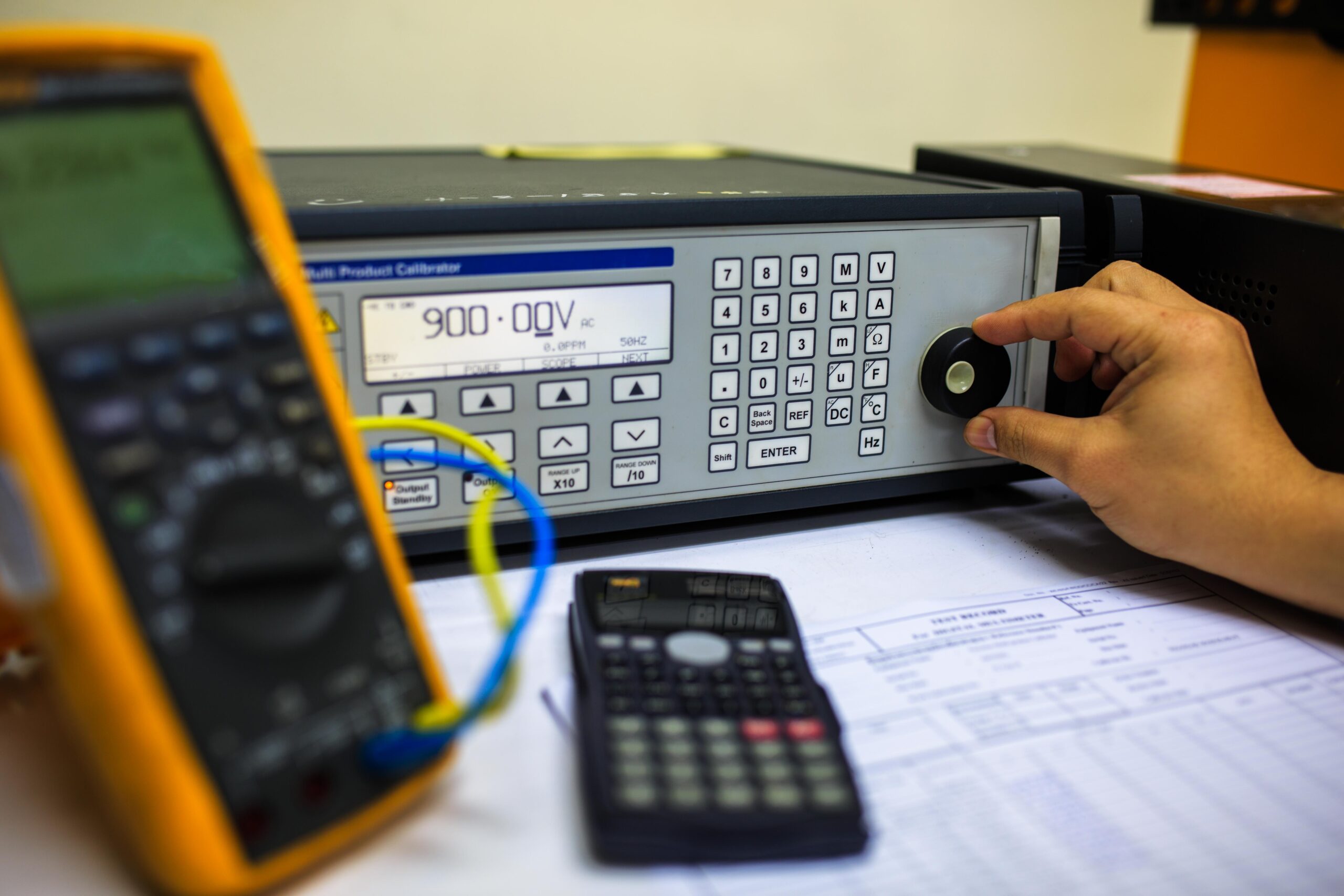

When you calibrate transmission test sets, you must establish measurement traceability to nationally recognized standards, such as those maintained by NIST or an equivalent national metrology institute. Traceability means an unbroken, documented chain of calibrations, each with a stated uncertainty, that links your equipment’s measurements to primary standards.

Your review of applicable standards should cover ITU-T recommendations, IEEE standards, and telecom-specific protocols that govern measurement accuracy requirements. You’ll also need robust calibration data storage that preserves historical records, uncertainty calculations, and the environmental conditions recorded during each calibration.

Conduct regular audits that verify measurement validity and compliance with regulatory requirements. Personnel training must ensure technicians understand traceability principles, proper documentation procedures, and measurement uncertainty analysis. Together, these elements form the foundation for reliable telecommunications testing and regulatory compliance.

Essential Preparation Steps and Reference Standards Setup

Before starting any transmission test set calibration, secure reference standards that are at least four times more accurate than your equipment’s specifications (a 4:1 ratio). These standards must have current calibration certificates with documented traceability to national metrology institutes.
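The 4:1 requirement reduces to a simple ratio check. The sketch below assumes both specifications are expressed as symmetric tolerances in the same unit (dB, ppm, and so on); the function names are hypothetical, and some laboratories compute the ratio from measurement uncertainties (a test uncertainty ratio) rather than from published accuracy specifications.

```python
def accuracy_ratio(uut_tolerance: float, reference_tolerance: float) -> float:
    """Ratio of the test set's (UUT's) tolerance to the reference standard's tolerance.

    Both values must be positive and in the same unit (e.g. dB or ppm).
    Hypothetical helper for illustration.
    """
    if uut_tolerance <= 0 or reference_tolerance <= 0:
        raise ValueError("tolerances must be positive")
    return uut_tolerance / reference_tolerance


def reference_is_adequate(
    uut_tolerance: float, reference_tolerance: float, required_ratio: float = 4.0
) -> bool:
    """True when the reference is at least `required_ratio` times more accurate."""
    return accuracy_ratio(uut_tolerance, reference_tolerance) >= required_ratio


if __name__ == "__main__":
    # Hypothetical example: test set level accuracy of ±0.2 dB.
    print(reference_is_adequate(0.2, 0.05))  # True: reference at ±0.05 dB gives 4:1
    print(reference_is_adequate(0.2, 0.1))   # False: reference at ±0.1 dB gives only 2:1
```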

Control the environment during calibration: keep temperature within ±2°C of the laboratory’s nominal value and relative humidity below 60%. Before connecting test instruments, follow safety protocols by ensuring proper grounding, checking power supply stability, and verifying that protective equipment works.
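Checking a logged environment against these limits is easy to automate. This is a minimal sketch, assuming a 23 °C nominal laboratory temperature (the text gives only the ±2°C band) and readings already collected from a data logger; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class EnvironmentReading:
    temperature_c: float
    relative_humidity_pct: float


def within_limits(
    reading: EnvironmentReading,
    nominal_temp_c: float = 23.0,   # assumed laboratory set point, not from the text
    temp_tolerance_c: float = 2.0,  # ±2 °C band from the text
    max_rh_pct: float = 60.0,       # relative humidity must stay below 60 %
) -> bool:
    """Check one logged reading against the temperature and humidity limits."""
    temp_ok = abs(reading.temperature_c - nominal_temp_c) <= temp_tolerance_c
    humidity_ok = reading.relative_humidity_pct < max_rh_pct
    return temp_ok and humidity_ok


def excursions(readings: Sequence[EnvironmentReading], **limits: float) -> List[int]:
    """Return the indices of readings that fall outside the limits."""
    return [i for i, r in enumerate(readings) if not within_limits(r, **limits)]


if __name__ == "__main__":
    log = [
        EnvironmentReading(23.4, 45.0),
        EnvironmentReading(25.6, 44.0),  # too warm
        EnvironmentReading(22.1, 62.0),  # too humid
    ]
    print(excursions(log))  # [1, 2]
```

Returning indices rather than a single boolean makes it easy to record exactly which readings invalidated a calibration run.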

Effective calibration scheduling coordinates downtime with operational requirements while allowing adequate warm-up time for both the test sets and the reference equipment. Operator training helps technicians understand sources of measurement uncertainty and proper handling techniques, which reduces avoidable uncertainty and preserves measurement integrity throughout the calibration process.

Core Calibration Procedures for Frequency Response and Level Accuracy

With your reference standards configured and environmental conditions stabilized, begin the frequency response calibration by establishing baseline measurements across the transmission test set’s specified frequency range. Match impedances between your reference generator and the test set under calibration; a mismatch causes reflections that introduce level errors into the readings.
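The effect of an impedance mismatch can be quantified with the standard transmission-line relations for reflection coefficient, return loss, and VSWR. The sketch below assumes purely resistive impedances and a 50 Ω default reference; adjust `z0` for the interface under test (for example 100 Ω for T1, or 75 Ω or 120 Ω for E1). The inverse relation is the one a TDR uses to turn a measured reflection into an impedance. The helper names are hypothetical.

```python
import math


def reflection_coefficient(z_load: float, z0: float = 50.0) -> float:
    """Gamma = (Z_L - Z0) / (Z_L + Z0) for purely resistive impedances (assumed)."""
    return (z_load - z0) / (z_load + z0)


def return_loss_db(gamma: float) -> float:
    """Return loss in dB; a perfect match (Gamma = 0) gives infinite return loss."""
    magnitude = abs(gamma)
    return math.inf if magnitude == 0 else -20.0 * math.log10(magnitude)


def vswr(gamma: float) -> float:
    """Voltage standing wave ratio from the reflection coefficient magnitude."""
    magnitude = abs(gamma)
    return math.inf if magnitude >= 1 else (1 + magnitude) / (1 - magnitude)


def impedance_from_reflection(gamma: float, z0: float = 50.0) -> float:
    """Invert Gamma to an impedance, e.g. from the step height seen on a TDR trace."""
    return math.inf if gamma >= 1 else z0 * (1 + gamma) / (1 - gamma)


if __name__ == "__main__":
    g = reflection_coefficient(75.0, 50.0)  # 75 ohm load on a 50 ohm system
    print(round(g, 3), round(return_loss_db(g), 2), round(vswr(g), 2))  # 0.2 13.98 1.5
    print(round(impedance_from_reflection(g, 50.0), 1))  # 75.0
```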

Execute these critical calibration steps:

- Frequency stability analysis - Verify oscillator accuracy against your cesium or rubidium reference standard

- Time domain reflectometry - Measure impedance discontinuities and cable losses in transmission paths

- Mixed signal interface testing - Calibrate both the analog and digital signal paths

- Spurious response identification - Document unwanted harmonics and intermodulation products across the frequency spectrum
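Frequency stability analysis usually starts from the fractional frequency offset and, for stability over averaging time, the Allan deviation. The sketch below computes both from readings taken against the cesium or rubidium reference. It assumes the readings are equally spaced averages over a fixed gate time τ0 and implements only the basic non-overlapping Allan deviation at that τ0; dedicated stability-analysis tools typically add overlapping estimators and multiple τ values. Function names and readings are hypothetical.

```python
import math
from typing import Sequence


def fractional_frequency_offset(measured_hz: float, nominal_hz: float) -> float:
    """y = (f_measured - f_nominal) / f_nominal (dimensionless)."""
    return (measured_hz - nominal_hz) / nominal_hz


def allan_deviation(y: Sequence[float]) -> float:
    """Non-overlapping Allan deviation at the sample interval tau0.

    `y` holds consecutive fractional-frequency values, each averaged over tau0:
    sigma_y(tau0) = sqrt( sum((y[i+1] - y[i])**2) / (2 * (M - 1)) )
    """
    if len(y) < 2:
        raise ValueError("need at least two fractional-frequency samples")
    squared_diffs = [(b - a) ** 2 for a, b in zip(y, y[1:])]
    return math.sqrt(sum(squared_diffs) / (2 * len(squared_diffs)))


if __name__ == "__main__":
    nominal = 10e6  # assumed 10 MHz oscillator output
    # Hypothetical gated frequency readings, one per tau0
    readings_hz = [10_000_000.050, 10_000_000.052, 10_000_000.049, 10_000_000.051]
    y = [fractional_frequency_offset(f, nominal) for f in readings_hz]
    print(f"mean offset: {sum(y) / len(y):.2e}")
    print(f"ADEV(tau0): {allan_deviation(y):.2e}")
```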

Document all measurements with their associated uncertainties, noting any deviations from manufacturer specifications that require adjustment or replacement of internal components.
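Recording each measurement with its uncertainty usually means building an uncertainty budget. This is a minimal sketch, assuming uncorrelated contributions with unit sensitivity coefficients, that combines standard uncertainties by root-sum-square and applies a coverage factor of k = 2 (approximately 95 % coverage for a normal distribution), in the general style of the GUM. The component names and values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable

# Divisors that convert a quoted value into a standard uncertainty.
DIVISORS = {
    "standard": 1.0,              # value is already a standard uncertainty
    "expanded_k2": 2.0,           # e.g. a certificate value quoted at k = 2
    "rectangular": math.sqrt(3),  # half-width of a uniform distribution
}


@dataclass
class Component:
    name: str
    value: float
    kind: str  # one of the DIVISORS keys

    def standard_uncertainty(self) -> float:
        return self.value / DIVISORS[self.kind]


def combined_standard_uncertainty(components: Iterable[Component]) -> float:
    """Root-sum-square of the standard uncertainties (assumes uncorrelated inputs)."""
    return math.sqrt(sum(c.standard_uncertainty() ** 2 for c in components))


def expanded_uncertainty(components: Iterable[Component], k: float = 2.0) -> float:
    return k * combined_standard_uncertainty(components)


if __name__ == "__main__":
    # Hypothetical level-accuracy budget, all values in dB
    budget = [
        Component("reference standard (certificate)", 0.04, "expanded_k2"),
        Component("display resolution", 0.005, "rectangular"),
        Component("repeatability", 0.01, "standard"),
        Component("temperature effect", 0.02, "rectangular"),
    ]
    print(f"U (k=2) = ±{expanded_uncertainty(budget):.3f} dB")
```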

Advanced Calibration Techniques for Jitter and Protocol-Specific Measurements

Modern transmission test sets require specialized calibration approaches for jitter measurements and protocol-specific functions, beyond basic frequency and amplitude verification. Calibrate jitter measurements using precision reference clocks with known timing characteristics. The calibration must also verify error detection capabilities by injecting controlled bit errors and confirming that the instrument detects them accurately.
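Two of these checks reduce to simple arithmetic. Jitter figures are commonly derived from time interval error (TIE) samples taken against the precision reference clock and expressed in unit intervals (UI), where 1 UI is one bit period; error-detection verification compares the errors the instrument counts with the number deliberately injected. The sketch below assumes TIE samples in seconds and an exact-count acceptance criterion for a clean loopback; the names and the criterion are hypothetical, and real jitter measurements also apply the band-limiting filters specified by the relevant ITU-T recommendations.

```python
import math
from typing import Sequence, Tuple


def jitter_peak_to_peak(tie_s: Sequence[float]) -> float:
    """Peak-to-peak jitter from time interval error samples (seconds)."""
    return max(tie_s) - min(tie_s)


def jitter_rms(tie_s: Sequence[float]) -> float:
    """RMS jitter about the mean TIE (removes any constant phase offset)."""
    mean = sum(tie_s) / len(tie_s)
    return math.sqrt(sum((t - mean) ** 2 for t in tie_s) / len(tie_s))


def to_unit_intervals(jitter_s: float, bit_rate_bps: float) -> float:
    """Convert seconds to UI, where 1 UI = 1 / bit rate."""
    return jitter_s * bit_rate_bps


def error_detection_ok(injected: int, detected: int, bits_sent: int) -> Tuple[bool, float]:
    """Return (pass, measured BER) for a controlled error-injection run.

    Hypothetical criterion: in a clean loopback every injected error should be counted.
    """
    return detected == injected, detected / bits_sent


if __name__ == "__main__":
    e1_rate = 2.048e6  # E1 line rate, bit/s
    tie = [0.0, 12e-9, -20e-9, 28.8e-9, -5e-9]  # hypothetical TIE samples
    pp = jitter_peak_to_peak(tie)
    print(f"{pp * 1e9:.1f} ns p-p = {to_unit_intervals(pp, e1_rate):.3f} UI")
    print(error_detection_ok(injected=100, detected=100, bits_sent=10**9))
```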

For protocol decode analysis, validate the instrument’s ability to interpret specific communication standards such as T1, E1, or Ethernet. Throughput validation requires testing with known data patterns at various transmission rates. Interface signal quality measurements must be calibrated against traceable electrical standards for parameters such as rise time, overshoot, and eye diagram characteristics. Each protocol needs its own calibration sequence that matches its specific timing and electrical requirements.
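Testing with known data patterns typically means a pseudo-random binary sequence (PRBS) that both ends can generate independently, so received bits can be compared against a local copy. The sketch below uses the PRBS7 polynomial x^7 + x^6 + 1 (period 127 bits) for brevity; test sets generally use longer patterns such as 2^15-1 or 2^23-1 defined in ITU-T O.150, and this generator is not claimed to match any particular instrument’s bit ordering. The function names are hypothetical.

```python
from typing import List, Sequence


def prbs7(n_bits: int, seed: int = 0x7F) -> List[int]:
    """Generate n_bits of PRBS7 (x^7 + x^6 + 1) with a 7-bit Fibonacci LFSR."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be non-zero or the LFSR locks up")
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1  # feedback from the last two stages
        state = ((state << 1) | new_bit) & 0x7F
        bits.append(new_bit)
    return bits


def count_bit_errors(expected: Sequence[int], received: Sequence[int]) -> int:
    """Compare a received stream bit-by-bit against the reference pattern."""
    if len(expected) != len(received):
        raise ValueError("streams must be the same length")
    return sum(e != r for e, r in zip(expected, received))


if __name__ == "__main__":
    reference = prbs7(1270)  # ten full periods
    received = list(reference)
    for position in (10, 500, 1000):  # inject three known errors (hypothetical positions)
        received[position] ^= 1
    errors = count_bit_errors(reference, received)
    print(errors, errors / len(reference))  # 3 errors in 1270 bits
```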

Post-Calibration Verification and Documentation Protocols

After completing your calibration procedures, verify through systematic testing that your transmission test set performs within specified tolerances. This performance evaluation confirms that the equipment meets quality assurance requirements before it returns to service.

Your post-calibration verification should include:

- Functional testing across all measurement ranges to confirm proper operation

- Cross-reference checks using independent standards to validate critical parameters

- Uncertainty calculations documenting measurement confidence intervals

- Complete documentation, including calibration certificates and test data, that complies with your documentation standards
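Pass/fail decisions during verification are more defensible when they account for measurement uncertainty. The sketch below applies one common guard-banded decision rule: a point passes only if the error plus the expanded uncertainty stays within tolerance, fails if the error minus the uncertainty exceeds it, and is otherwise reported as indeterminate. The rule, names, and values are assumptions; follow the decision rule your laboratory documents and agrees with the customer.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"
    INDETERMINATE = "indeterminate"


def verify_point(nominal: float, measured: float, tolerance: float, expanded_u: float) -> Verdict:
    """Guard-banded decision for one verification point (all values in one unit).

    Hypothetical rule: PASS if |error| + U <= tolerance, FAIL if |error| - U > tolerance.
    """
    error = abs(measured - nominal)
    if error + expanded_u <= tolerance:
        return Verdict.PASS
    if error - expanded_u > tolerance:
        return Verdict.FAIL
    return Verdict.INDETERMINATE


if __name__ == "__main__":
    # Hypothetical level-accuracy points: (nominal dBm, measured dBm)
    points = [(-10.0, -10.04), (-20.0, -20.17), (-30.0, -30.45)]
    for nominal, measured in points:
        verdict = verify_point(nominal, measured, tolerance=0.2, expanded_u=0.08)
        print(f"{nominal:6.1f} dBm -> {verdict.value}")
```

Reporting "indeterminate" separately, rather than forcing a pass or fail, keeps borderline points visible for the authorized reviewer.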

Obtain sign-off from authorized personnel before releasing the equipment. Keep thorough records showing traceability to national standards, measurement uncertainties, and any adjustments made. This documentation is essential for audits and demonstrates compliance with industry regulations.

Routine Maintenance and Performance Monitoring Between Calibrations

Between formal calibrations, maintain your transmission test set’s accuracy and reliability through systematic monitoring and preventive maintenance. Run the built-in self-test functions daily to confirm basic operation and to detect drift early.

Keep firmware and software up to date to maintain compatibility with evolving telecommunications protocols and to avoid measurement errors caused by outdated algorithms.

Your hardware maintenance schedule should include connector cleaning, cable inspection, and internal component checks to identify wear before it affects measurements. Environmental factors such as temperature fluctuations, humidity, and vibration should be monitored continuously because they directly affect measurement precision.

Record performance trends so you can predict failures and replace components proactively. This systematic approach can justify longer calibration intervals without sacrificing measurement confidence, and it reduces unexpected downtime during critical testing operations.
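Trend records become predictive once you fit them. This is a minimal sketch, assuming drift is roughly linear over the period of interest, that fits a least-squares line to periodic check results and estimates when the error would reach the tolerance limit. The function names, data, and the linear-drift assumption are hypothetical; real drift can be nonlinear, so treat the estimate as a prompt for review rather than a schedule.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predicted_limit_day(
    days: Sequence[float], errors: Sequence[float], limit: float
) -> Optional[float]:
    """Day on which the fitted (assumed linear) trend reaches +limit or -limit."""
    slope, intercept = fit_line(days, errors)
    if slope == 0:
        return None  # no detectable drift
    target = limit if slope > 0 else -limit
    return (target - intercept) / slope


if __name__ == "__main__":
    # Hypothetical weekly self-check errors (dB) against a ±0.2 dB tolerance
    days = [0, 7, 14, 21, 28]
    errors = [0.010, 0.018, 0.031, 0.039, 0.052]
    print(f"limit reached around day {predicted_limit_day(days, errors, 0.2):.0f}")
```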

Selecting Qualified Calibration Service Providers and Accreditation Criteria

When narrowing down calibration service providers for your transmission test sets, verify their ISO/IEC 17025 accreditation and confirm that the accredited scope covers your specific equipment models and measurement parameters. Accreditation by a recognized body such as A2LA or NVLAP provides independent validation of a provider’s competency.

Consider these essential selection criteria:

- Technical Expertise - Verify technician certifications and specialized knowledge of telecommunications test equipment

- Service Flexibility - Compare onsite calibration with laboratory services based on your operational needs

- Support Quality - Evaluate the provider’s customer support, documentation standards, and staff training

- Timeline Management - Confirm that calibration turnaround times fit your maintenance schedules and minimize downtime

Choose providers who document their measurement uncertainty calculations and maintain current traceability to national standards.

Developing and Implementing Effective Calibration Management Programs

Once you’ve selected qualified calibration service providers, your next priority is a systematic calibration management program that keeps your transmission test sets reliable. Start with an inventory system that tracks calibration schedules, certificates, and equipment status across your organization.
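At its core, inventory tracking means computing due dates and flagging instruments that are overdue or nearly due. The sketch below assumes a fixed interval per instrument and a 30-day warning window; the class, field names, asset IDs, and dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class Instrument:
    asset_id: str
    model: str
    last_calibrated: date
    interval_days: int

    @property
    def due_date(self) -> date:
        return self.last_calibrated + timedelta(days=self.interval_days)


def calibration_status(inst: Instrument, today: date, warning_days: int = 30) -> str:
    """Classify an instrument as 'overdue', 'due soon', or 'current'."""
    if inst.due_date < today:
        return "overdue"
    if inst.due_date <= today + timedelta(days=warning_days):
        return "due soon"
    return "current"


def status_report(inventory: List[Instrument], today: date) -> Dict[str, List[str]]:
    """Group asset IDs by status, each group sorted by due date."""
    report: Dict[str, List[str]] = {"overdue": [], "due soon": [], "current": []}
    for inst in sorted(inventory, key=lambda i: i.due_date):
        report[calibration_status(inst, today)].append(inst.asset_id)
    return report


if __name__ == "__main__":
    inventory = [  # hypothetical assets
        Instrument("TS-001", "example-model-a", date(2024, 1, 10), 365),
        Instrument("TS-002", "example-model-b", date(2024, 3, 1), 365),
        Instrument("TS-003", "example-model-a", date(2024, 9, 15), 365),
    ]
    print(status_report(inventory, today=date(2025, 2, 1)))
```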

Develop personnel training protocols that ensure technicians understand calibration requirements and can interpret results correctly. Integrate quality assurance processes that verify calibration completeness and accuracy before equipment returns to service.

Use data analysis tools to monitor calibration trends, identify recurring issues, and optimize maintenance schedules. Collect feedback from field users so that real-world performance data informs your calibration frequency decisions.
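Calibration history can feed back into interval decisions. The sketch below implements a deliberately simple reactive rule: lengthen the interval modestly after an in-tolerance as-found result, cut it sharply after an out-of-tolerance one, and clamp it to fixed bounds. The factors and bounds are hypothetical placeholders, not values from any standard; formal interval-analysis methods, such as those described in NCSL International RP-1, apply statistical treatment to the full calibration history.

```python
def adjust_interval(
    current_days: int,
    as_found_in_tolerance: bool,
    extend_factor: float = 1.1,   # hypothetical: +10 % after an in-tolerance result
    shorten_factor: float = 0.5,  # hypothetical: halve after an out-of-tolerance result
    min_days: int = 90,           # hypothetical lower bound
    max_days: int = 730,          # hypothetical upper bound
) -> int:
    """Propose the next calibration interval from the latest as-found result."""
    factor = extend_factor if as_found_in_tolerance else shorten_factor
    proposed = round(current_days * factor)
    return max(min_days, min(max_days, proposed))


if __name__ == "__main__":
    interval = 365
    for in_tol in [True, True, False, True]:  # hypothetical as-found history
        interval = adjust_interval(interval, in_tol)
        print(interval)
```

Shortening much faster than lengthening is intentional: an out-of-tolerance result is stronger evidence than a single in-tolerance one.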

Document all procedures, maintain detailed records, and conduct regular program reviews to drive continuous improvement and maintain regulatory compliance across your calibration management system.

Transmission Excellence Through Precise Test Set Calibration

Transmission test sets are the backbone of telecommunications infrastructure maintenance, providing the accurate measurements essential for network reliability, troubleshooting effectiveness, and compliance with industry standards. By implementing regular professional calibration that verifies frequency response, level accuracy, and specialized protocol testing functions, you ensure these critical instruments deliver the precision required for maintaining high-quality communication services.

Proper calibration not only prevents costly network downtime caused by inaccurate measurements but also extends equipment life while maintaining the documentation necessary for regulatory compliance and quality assurance programs. The reliability of entire telecommunications networks depends on the accuracy of these test instruments, so don’t compromise service quality with inadequately calibrated transmission test sets.

Contact EML Calibration today to benefit from their specialized expertise in transmission test set calibration, supported by ISO/IEC 17025:2017 accreditation and NIST traceable standards. With 25 years of proven experience in telecommunications test equipment and convenient on-site or comprehensive laboratory calibration services, EML Calibration ensures your transmission test sets maintain the precision and reliability that your critical network operations demand.